Honda partner Helm.ai unveils system for automated driving

According to the company, Helm.ai Vision provides enhanced all-round perception, which reduces the need for HD maps and lidar sensors in systems up to Level 2+, and enables automated driving up to Level 3.

That means the software is not suitable for so-called robotaxis, which typically operate at Level 4. However, Helm.ai says the technology is ready for mass-market adoption and could be integrated into privately owned vehicles such as Honda's upcoming EVs.

To clarify: Level 3, also referred to as highly automated driving, allows the vehicle to take over certain driving tasks independently, while the driver remains present but does not need to constantly monitor the system. The driver must, however, be able to take back control at the system’s request. For instance, Mercedes-Benz already offers Level 3 functions in the EQS and S-Class under the optional Drive Pilot system, enabling highly automated motorway driving at speeds of up to 95 kph.

Level 2+, by contrast, is an industry term rather than an official SAE level. It describes a form of semi-automated driving that goes beyond Level 2 by offering features resembling those of Level 3. However, the driver remains fully responsible for the driving task and must supervise the system at all times.

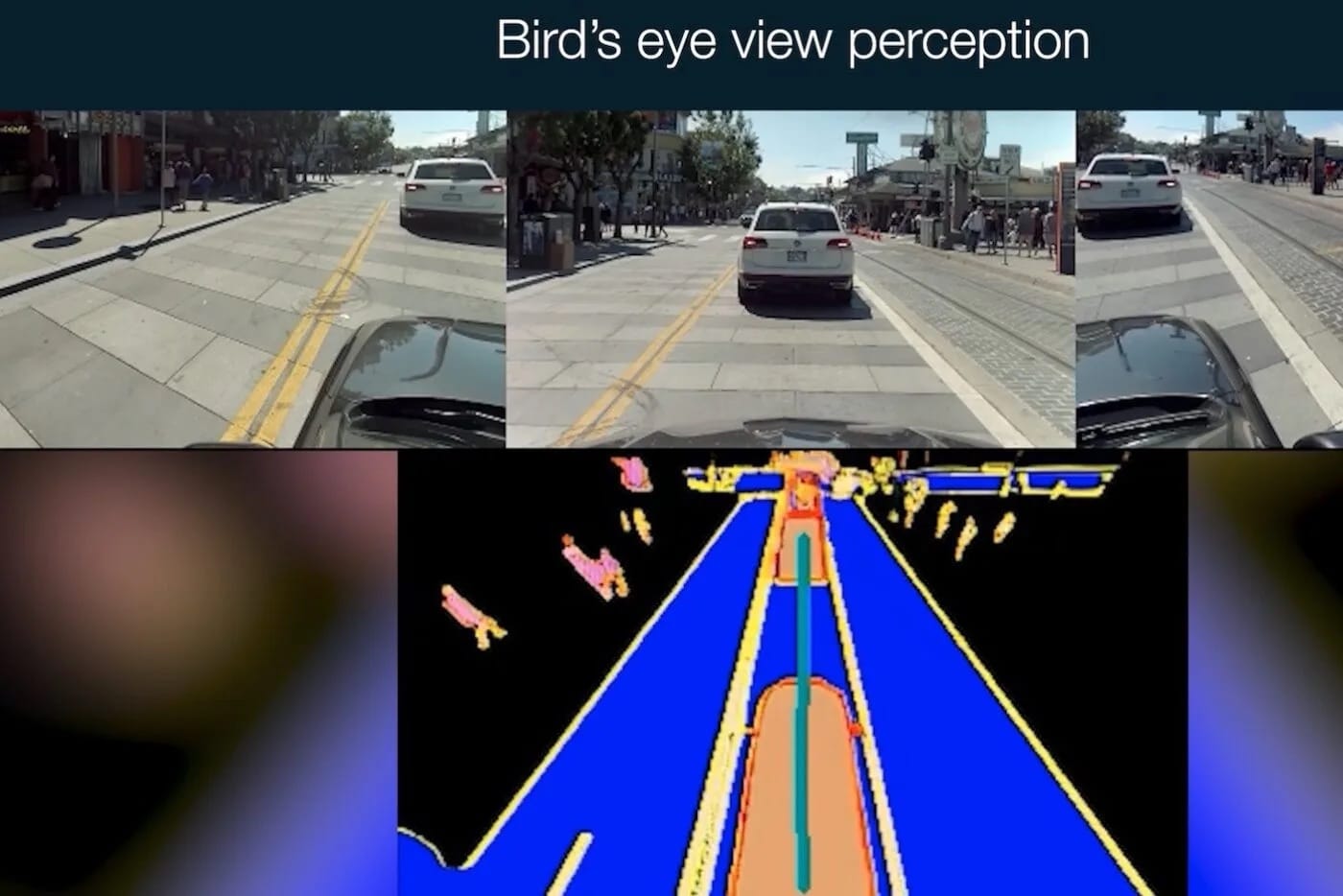

Helm.ai's system uses a proprietary technology called Deep Teaching, which enables large-scale unsupervised learning from real-world driving data and reduces dependence on expensive, manually annotated datasets. The system is designed to handle the complexities of urban driving, such as heavy traffic, varying road conditions, and unpredictable behaviour from pedestrians and other vehicles. It offers real-time 3D object detection, full semantic segmentation, and multi-camera surround-view fusion, allowing the vehicle to interpret its surroundings accurately. From this fused input, Helm.ai Vision generates a bird's-eye view (BEV): a single spatial map that merges the images from all cameras.

"Robust urban perception, which culminates in the BEV fusion task, is the gatekeeper of advanced autonomy," said Vladislav Voroninski, CEO and founder of Helm.ai. "Helm.ai Vision addresses the full spectrum of perception tasks required for high-end Level 2+ and Level 3 autonomous driving on production-grade embedded systems, enabling automakers to deploy a vision-first solution with high accuracy and low latency. Starting with Helm.ai Vision, our modular approach to the autonomy stack substantially reduces validation effort and increases interpretability, making it uniquely suited for near-term mass market production deployment in software-defined vehicles."

According to Reuters, the technology is expected to be deployed in Honda’s new 0 Series starting in 2026. The Japanese carmaker had presented prototypes of the series in January at CES in Las Vegas. Honda has been an investor in Helm.ai since 2022. Speaking to the news agency, Voroninski said: “We’re definitely in talks with many OEMs and we’re on track for deploying our technology in production.” He added: “Our business model is essentially licensing this kind of software and also foundation model software to the automakers.”
